Working any scalable distributed platform calls for a dedication to reliability, to make sure clients have what they want once they want it. The dependencies could possibly be fairly intricate, particularly with a platform as massive as Roblox. Constructing dependable providers signifies that, whatever the complexity and standing of dependencies, any given service won’t be interrupted (i.e. extremely out there), will function bug-free (i.e. excessive high quality) and with out errors (i.e. fault tolerance).

Why Reliability Issues

Our Account Identification group is dedicated to reaching larger reliability, because the compliance providers we constructed are core elements to the platform. Damaged compliance can have extreme penalties. The price of blocking Roblox’s pure operation could be very excessive, with extra sources essential to get better after a failure and a weakened person expertise.

The standard method to reliability focuses totally on availability, however in some instances phrases are blended and misused. Most measurements for availability simply assess whether or not providers are up and working, whereas elements akin to partition tolerance and consistency are generally forgotten or misunderstood.

In accordance with the CAP theorem, any distributed system can solely assure two out of those three elements, so our compliance providers sacrifice some consistency with the intention to be extremely out there and partition-tolerant. However, our providers sacrificed little and located mechanisms to attain good consistency with cheap architectural modifications defined beneath.

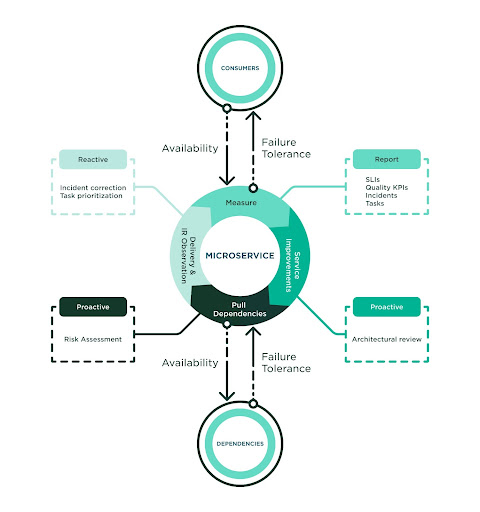

The method to achieve larger reliability is iterative, with tight measurement matching steady work with the intention to stop, discover, detect and repair defects earlier than incidents happen. Our group recognized robust worth within the following practices:

- Proper measurement – Construct full observability round how high quality is delivered to clients and the way dependencies ship high quality to us.

- Proactive anticipation – Carry out actions akin to architectural critiques and dependency threat assessments.

- Prioritize correction – Carry larger consideration to incident report decision for the service and dependencies which are linked to our service.

Constructing larger reliability calls for a tradition of high quality. Our group was already investing in performance-driven improvement and is aware of the success of a course of relies upon upon its adoption. The group adopted this course of in full and utilized the practices as a typical. The next diagram highlights the elements of the method:

The Energy of Proper Measurement

Earlier than diving deeper into metrics, there’s a fast clarification to make relating to Service Degree measurements.

- SLO (Service Degree Goal) is the reliability goal that our group goals for (i.e. 99.999%).

- SLI (Service Degree Indicator) is the achieved reliability given a timeframe (i.e. 99.975% final February).

- SLA (Service Degree Settlement) is the reliability agreed to ship and be anticipated by our customers at a given timeframe (i.e. 99.99% per week).

The SLI ought to mirror the provision (no unhandled or lacking responses), the failure tolerance (no service errors) and high quality attained (no surprising errors). Subsequently, we outlined our SLI because the “Success Ratio” of profitable responses in comparison with the overall requests despatched to a service. Profitable responses are these requests that have been dispatched in time and kind, which means no connectivity, service or surprising errors occurred.

This SLI or Success Ratio is collected from the customers’ standpoint (i.e., purchasers). The intention is to measure the precise end-to-end expertise delivered to our customers in order that we really feel assured SLAs are met. Not doing so would create a false sense of reliability that ignores all infrastructure issues to attach with our purchasers. Just like the buyer SLI, we acquire the dependency SLI to trace any potential threat. In observe, all dependency SLAs ought to align with the service SLA and there’s a direct dependency with them. The failure of 1 implies the failure of all. We additionally observe and report metrics from the service itself (i.e., server) however this isn’t the sensible supply for top reliability.

Along with the SLIs, each construct collects high quality metrics which are reported by our CI workflow. This observe helps to strongly implement high quality gates (i.e., code protection) and report different significant metrics, akin to coding normal compliance and static code evaluation. This subject was beforehand coated in one other article, Constructing Microservices Pushed by Efficiency. Diligent observance of high quality provides up when speaking about reliability, as a result of the extra we spend money on reaching glorious scores, the extra assured we’re that the system won’t fail throughout opposed circumstances.

Our group has two dashboards. One delivers all visibility into each the Shoppers SLI and Dependencies SLI. The second reveals all high quality metrics. We’re engaged on merging every thing right into a single dashboard, in order that all the elements we care about are consolidated and able to be reported by any given timeframe.

Anticipate Failure

Doing Architectural Evaluations is a basic a part of being dependable. First, we decide whether or not redundancy is current and if the service has the means to outlive when dependencies go down. Past the everyday replication concepts, most of our providers utilized improved twin cache hydration strategies, twin restoration methods (akin to failover native queues), or information loss methods (akin to transactional help). These subjects are intensive sufficient to warrant one other weblog entry, however finally one of the best suggestion is to implement concepts that take into account catastrophe eventualities and decrease any efficiency penalty.

One other necessary side to anticipate is something that would enhance connectivity. Meaning being aggressive about low latency for purchasers and getting ready them for very excessive site visitors utilizing cache-control strategies, sidecars and performant insurance policies for timeouts, circuit breakers and retries. These practices apply to any shopper together with caches, shops, queues and interdependent purchasers in HTTP and gRPC. It additionally means bettering wholesome indicators from the providers and understanding that well being checks play an necessary position in all container orchestration. Most of our providers do higher indicators for degradation as a part of the well being examine suggestions and confirm all important elements are useful earlier than sending wholesome indicators.

Breaking down providers into important and non-critical items has confirmed helpful for specializing in the performance that issues essentially the most. We used to have admin-only endpoints in the identical service, and whereas they weren’t used usually they impacted the general latency metrics. Transferring them to their very own service impacted each metric in a constructive course.

Dependency Danger Evaluation is a crucial device to establish potential issues with dependencies. This implies we establish dependencies with low SLI and ask for SLA alignment. These dependencies want particular consideration throughout integration steps so we commit additional time to benchmark and take a look at if the brand new dependencies are mature sufficient for our plans. One good instance is the early adoption we had for the Roblox Storage-as-a-Service. The combination with this service required submitting bug tickets and periodic sync conferences to speak findings and suggestions. All of this work makes use of the “reliability” tag so we are able to shortly establish its supply and priorities. Characterization occurred usually till we had the boldness that the brand new dependency was prepared for us. This additional work helped to drag the dependency to the required degree of reliability we count on to ship appearing collectively for a standard aim.

Carry Construction to Chaos

It’s by no means fascinating to have incidents. However once they occur, there’s significant info to gather and be taught from with the intention to be extra dependable. Our group has a group incident report that’s created above and past the everyday company-wide report, so we deal with all incidents whatever the scale of their influence. We name out the foundation trigger and prioritize all work to mitigate it sooner or later. As a part of this report, we name in different groups to repair dependency incidents with excessive precedence, comply with up with correct decision, retrospect and search for patterns which will apply to us.

The group produces a Month-to-month Reliability Report per Service that features all of the SLIs defined right here, any tickets we have now opened due to reliability and any potential incidents related to the service. We’re so used to producing these studies that the subsequent pure step is to automate their extraction. Doing this periodic exercise is necessary, and it’s a reminder that reliability is consistently being tracked and thought of in our improvement.

Our instrumentation consists of customized metrics and improved alerts in order that we’re paged as quickly as potential when identified and anticipated issues happen. All alerts, together with false positives, are reviewed each week. At this level, sharpening all documentation is necessary so our customers know what to anticipate when alerts set off and when errors happen, after which everybody is aware of what to do (e.g., playbooks and integration pointers are aligned and up to date usually).

Finally, the adoption of high quality in our tradition is essentially the most important and decisive think about reaching larger reliability. We are able to observe how these practices utilized to our day-to-day work are already paying off. Our group is obsessive about reliability and it’s our most necessary achievement. We’ve elevated our consciousness of the influence that potential defects may have and once they could possibly be launched. Providers that applied these practices have constantly reached their SLOs and SLAs. The reliability studies that assist us observe all of the work we have now been doing are a testomony to the work our group has completed, and stand as invaluable classes to tell and affect different groups. That is how the reliability tradition touches all elements of our platform.

The street to larger reliability isn’t a straightforward one, however it’s obligatory if you wish to construct a trusted platform that reimagines how folks come collectively.

Alberto is a Principal Software program Engineer on the Account Identification group at Roblox. He’s been within the recreation trade a very long time, with credit on many AAA recreation titles and social media platforms with a powerful deal with extremely scalable architectures. Now he’s serving to Roblox attain development and maturity by making use of finest improvement practices.